Embracing the Role-Play Strategy

Prompt engineering is like giving a smart robot specific instructions to get the best help possible. Imagine you’re asking a super-smart assistant for advice or information. How you ask the question can make a big difference in the kind of answer you get back. One cool trick to get even better answers is to pretend the robot is someone else, like a famous scientist, a helpful librarian, or even a detective. This trick is called “assigning roles,” and it’s a great way to make the assistant’s answers more interesting and useful.

Prompt engineering is just a fancy term for figuring out the best way to ask your AI assistant questions. The goal is to ask questions in a way that the assistant not only understands what you’re asking but also gives you the best possible answer. It’s a bit like knowing exactly how to ask your friend for a favor so they’ll say yes.

Hi Copilot, Act as [role]

At its heart, telling Microsoft Copilot, “Hi Copilot, act as [role],” is like giving it a part in a play. You set the stage, define the character, and the AI steps into those shoes, adopting the persona, expertise, or perspective of the role you’ve assigned. Whether the role is an expert in taxes, search engine optimization, or cybersecurity, this approach tailors the AI’s responses to fit the specific needs and context of your query.

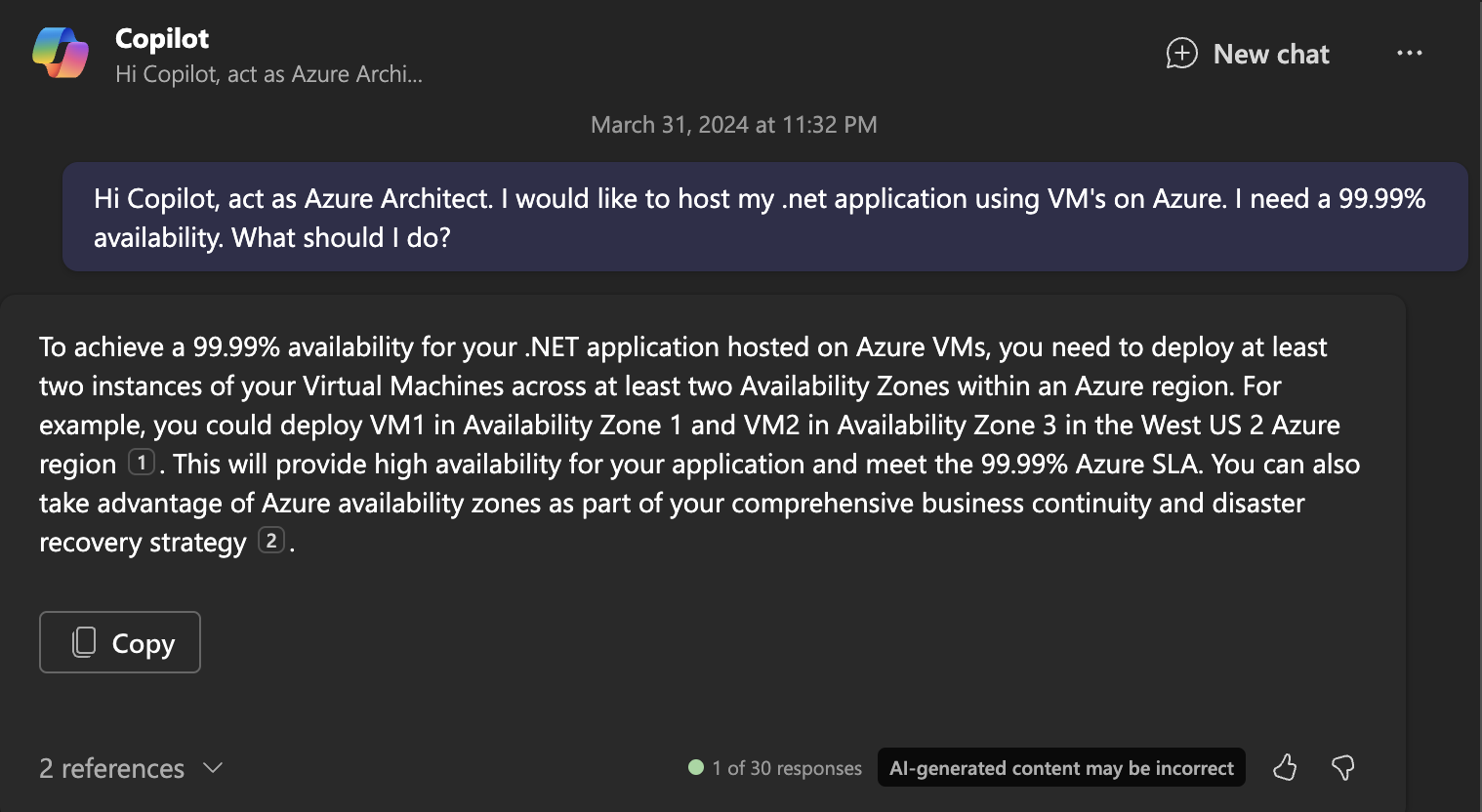
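
If you reuse the same pattern a lot, it can help to build the prompt text with a small helper. The snippet below is only a sketch of that idea in Python: `build_role_prompt` is a made-up name for illustration, it does nothing more than string formatting, and it does not call Copilot or any Microsoft API. You would still paste the resulting text into Copilot yourself.

```python
def build_role_prompt(role: str, request: str, assistant: str = "Copilot") -> str:
    """Return a prompt that follows the "Hi Copilot, act as [role]" pattern.

    Hypothetical helper for illustration: plain string formatting only.
    """
    role = role.strip()
    request = request.strip()
    if not role or not request:
        raise ValueError("Both a role and a request are required.")
    return f"Hi {assistant}, act as {role}. {request}"


if __name__ == "__main__":
    # Example: the role goes first, the actual question follows.
    print(
        build_role_prompt(
            "Azure Architect",
            "I would like to protect my application against DDoS attacks. "
            "What should I do?",
        )
    )
```

Keeping the role and the request as separate arguments makes it easy to ask the same question from different perspectives, for example once as an architect and once as a security expert.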

Example: Azure Architect

Microsoft 365 Copilot is well aware of Microsoft Azure (not the resources in your own Azure environment, but Azure as a platform and its concepts). That means Copilot can help you with Azure architecture work. Here are some example prompts:

- Hi Copilot, act as Azure Architect. I would like to host my .NET application using VMs on Azure. I need 99.99% availability. What should I do?

- Hi Copilot, act as Azure Architect. I would like to have my application (hosted on VMs) protected against DDoS attacks. What should I do?

- Hi Copilot, act as Azure Architect. I am a Dutch healthcare organisation and need to follow Dutch law. What Azure regions should I use?

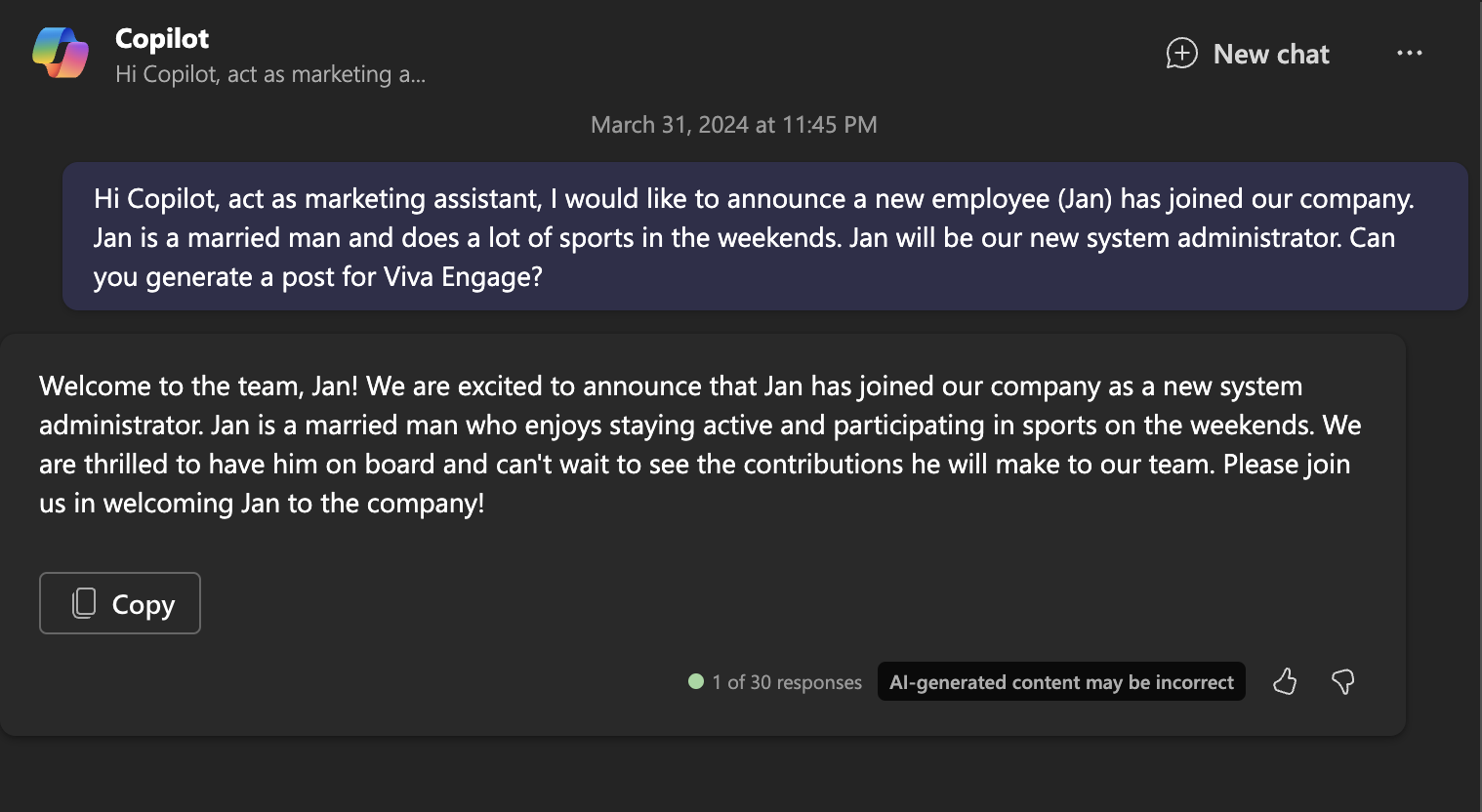

Example: Marketing Assistant

Microsoft 365 Copilot can be your helpful marketing assistant. When you tell Copilot to act as a marketing assistant, it becomes a handy buddy for all kinds of marketing tasks. Here are some example prompts:

- Hi Copilot, act as marketing assistant. I would like to announce that a new employee (Jan) has joined our company. Jan is a married man and does a lot of sports on weekends. Jan will be our new system administrator. Can you generate a post for Viva Engage?

- Hi Copilot, act as marketing assistant. I have a text. Can you rewrite this text to be SEO optimized? Here is the text: “[put text here]”

- Hi Copilot, act as marketing assistant. I have a generic sales text for my product. Can you rewrite my text so it is tailored to the finance market? Here is the text: “[put text here]”
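
The “[put text here]” placeholder in these prompts is easy to fill in by hand, but if you rewrite a lot of texts you could keep the prompts as templates. Here is a minimal sketch using Python’s standard `string.Template`. The `TEMPLATES` dictionary and the `fill_prompt` function are hypothetical names, the wording is adapted from the example prompts above, and nothing here talks to Copilot itself.

```python
from string import Template

# Hypothetical templates adapted from the example prompts; $text marks the
# spot where "[put text here]" appears in the examples.
TEMPLATES = {
    "seo": Template(
        "Hi Copilot, act as marketing assistant. I have a text. "
        "Can you rewrite this text to be SEO optimized? "
        'Here is the text: "$text"'
    ),
    "finance": Template(
        "Hi Copilot, act as marketing assistant. "
        "I have a generic sales text for my product. "
        "Can you rewrite my text so it is tailored to the finance market? "
        'Here is the text: "$text"'
    ),
}


def fill_prompt(kind: str, text: str) -> str:
    """Insert the text to rewrite into the chosen prompt template."""
    text = text.strip()
    if not text:
        raise ValueError("The text to rewrite must not be empty.")
    return TEMPLATES[kind].substitute(text=text)


if __name__ == "__main__":
    print(fill_prompt("seo", "Our new widget saves you time."))
```

Because the role is part of every template, each prompt keeps the “act as” framing even when only the text changes.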

Fewer hallucinations

When AI “hallucinates,” it’s not seeing things, but rather giving out information that might not be accurate or even making up facts that don’t exist. This happens because AI tries to fill in gaps in what it knows with guesses, and sometimes these guesses are way off. It’s like if you asked someone about a book they hadn’t read, and instead of saying they don’t know, they try to make up a story about it. AI does this in an attempt to be helpful, but it can lead to confusion if the made-up information is taken as fact. That’s why it’s important to double-check the facts when using AI, especially for important tasks or decisions.

Assigning a role to Copilot helps reduce hallucinations by narrowing Copilot’s focus to a specific area of expertise or character. Imagine you’re asking a friend for advice, but instead of asking them about anything and everything, you ask them to give advice based on their expertise as a chef, a teacher, or a computer programmer. This way, your friend stays within what they know best, reducing the chances of them giving you incorrect or made-up information. Similarly, when you tell an AI like Copilot to act like a historian or a scientist, it focuses on pulling information related to those roles. This focus helps the AI avoid making guesses outside its set role, which lowers the chances of incorrect or fabricated answers. It’s a bit like putting guardrails on a path, keeping the AI on track and within the bounds of reliable and relevant information.

Conclusion

To sum up, think of using roles with Copilot like having a superpower in how we interact with technology. For instance, when we tell Copilot to act as an Azure architect, it focuses on giving us the best advice on cloud infrastructure, security, and network designs, much like a seasoned professional in that field would. It sticks to the facts and insights relevant to Azure, greatly reducing the chances of giving us wrong or made-up information. Similarly, if we assign the role of marketing assistant to Microsoft 365 Copilot, it homes in on strategies, campaign ideas, and consumer insights, providing targeted and valuable advice just like a real marketing guru would.

These approaches transform Copilot from a general tool into a specialized ally, whether we’re designing a cloud solution or planning a marketing campaign. It’s like having an expert by your side, ready to dive deep into specifics, making our projects not only more successful but also more exciting.