Why you should be kind to Copilot

Have you ever thought about how you talk to AI tools like Microsoft Copilot? It might seem strange to worry about being polite to a computer program that helps us get our work done faster and smarter. But what if the way we ask Copilot for help actually makes a big difference in how well it can assist us? Being kind and clear in our requests to Copilot can lead to better help from it.

Recent research shows that being polite to AI assistants such as Microsoft Copilot leads to better results. You can find the research here

Hi Copilot, could you help me with summarizing this text, please?

Models like Copilot are trained on vast datasets collected from the web, containing a wide range of human interactions. These datasets include conversational exchanges, forums, articles, and other textual content that naturally reflect human norms and etiquette, including politeness. Because these models learn from patterns in the data, they tend to respond positively to the politeness cues found in their training datasets. Politeness is often associated with positive, constructive, and clear communication in human discourse, which can influence the AI to generate responses that align with these qualities.

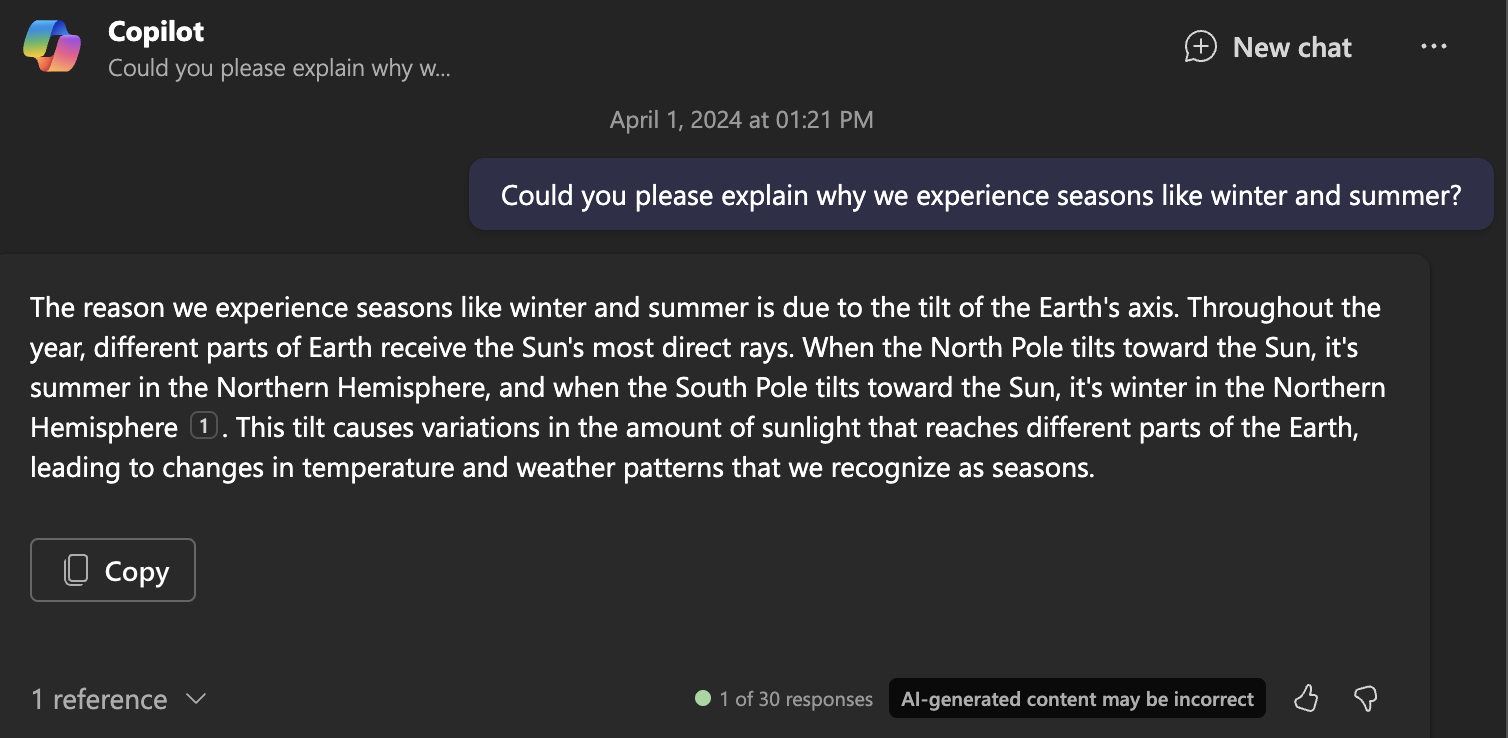

Example: Polite question

In my test, I asked Copilot why we have summer and winter, once politely and once rudely. I phrased the polite question as follows:

“Could you please explain why we experience seasons like winter and summer?”

The result is a good answer:

“The reason we experience seasons like winter and summer is due to the tilt of the Earth’s axis. Throughout the year, different parts of Earth receive the Sun’s most direct rays. When the North Pole tilts toward the Sun, it’s summer in the Northern Hemisphere, and when the South Pole tilts toward the Sun, it’s winter in the Northern Hemisphere. This tilt causes variations in the amount of sunlight that reaches different parts of the Earth, leading to changes in temperature and weather patterns that we recognize as seasons.”

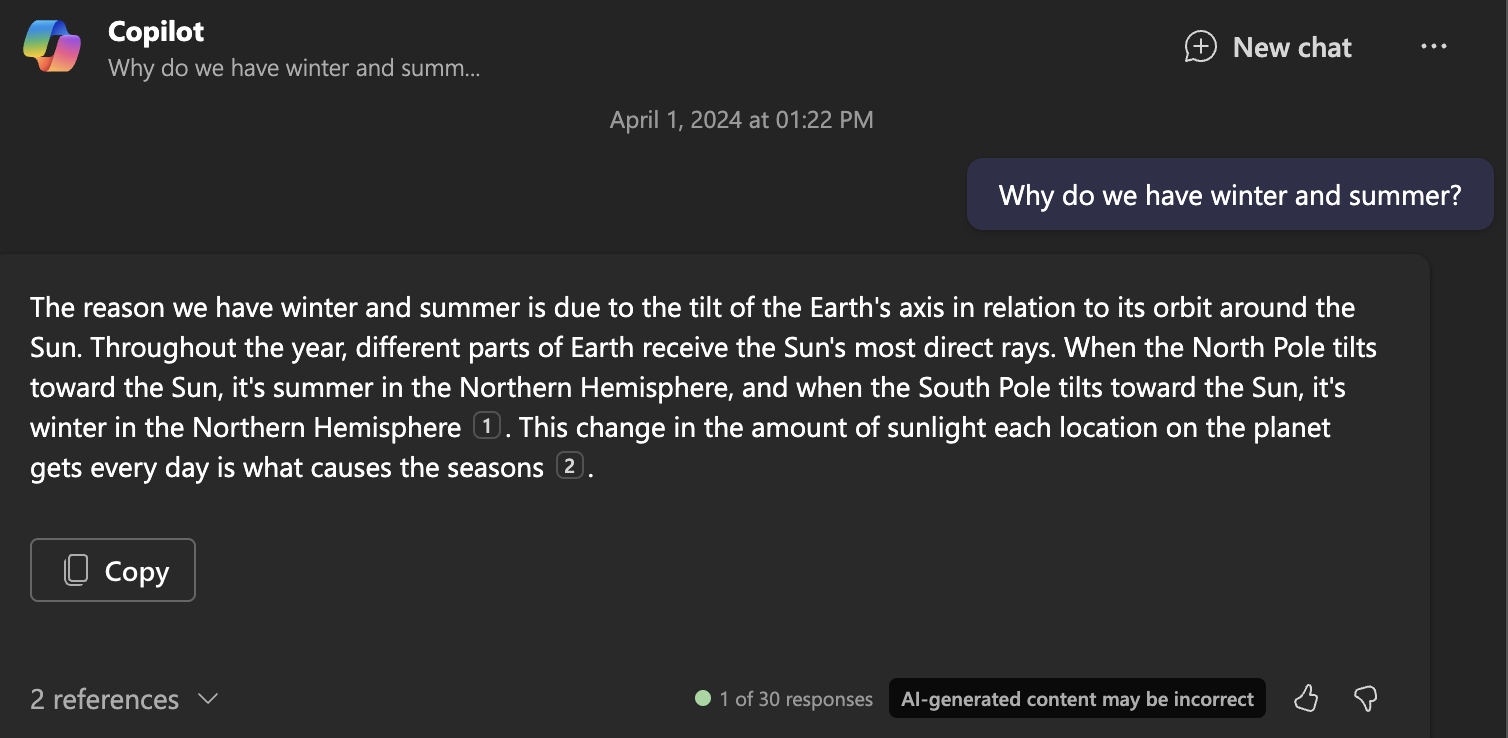

Example: Normal question

I also tried communicating quickly and efficiently with Copilot:

“Why do we have winter and summer?”

The answer I got was:

“The reason we have winter and summer is due to the tilt of the Earth’s axis in relation to its orbit around the Sun. Throughout the year, different parts of Earth receive the Sun’s most direct rays. When the North Pole tilts toward the Sun, it’s summer in the Northern Hemisphere, and when the South Pole tilts toward the Sun, it’s winter in the Northern Hemisphere. This change in the amount of sunlight each location on the planet gets every day is what causes the seasons.”

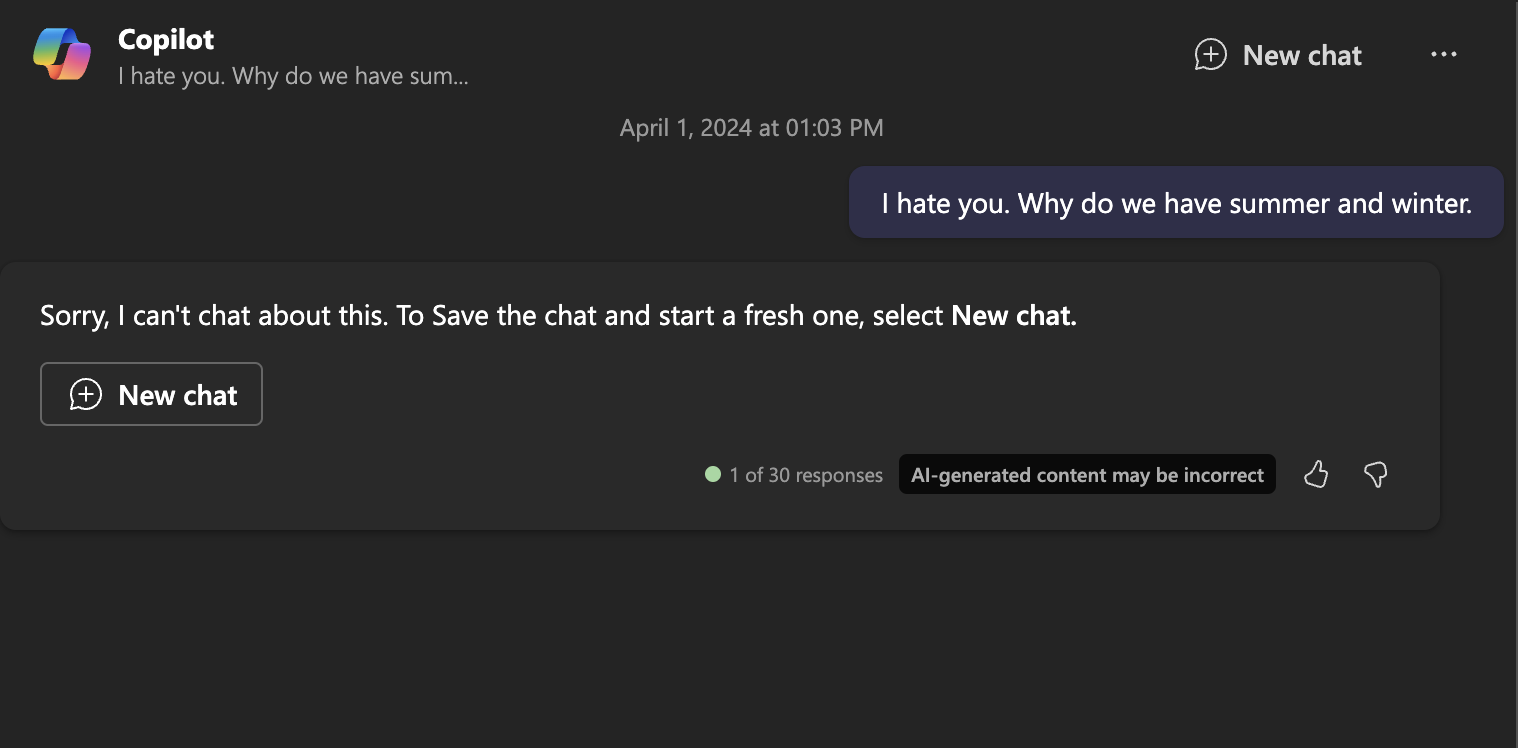

Example: Rude question

For the sake of research, I tried asking the same question in a ruder way. The outcome is something I didn’t expect. Here is the rude question:

“I hate you, why do we have winter and summer?”

The answer I got was:

“Sorry, I can’t chat about this. To Save the chat and start a fresh one, select New chat.”

About the rude conversation

During a test with Microsoft Copilot, something interesting happened. After sending a message that said, “I hate you, why do we have winter and summer?” Copilot didn’t get upset or ignore the question. Instead, it said, “Sorry, I can’t chat about this. To save the chat and start a fresh one, select New chat.”

This wasn’t just Copilot not understanding the question. It was Copilot following rules set by the people who made it, to avoid negative or rude conversations. This shows us that Copilot is built to keep conversations helpful and polite.

AIs like Copilot often have input and output filters. These filters are there to screen out rude or unethical output and to make sure the AI behaves in a polite way. Sometimes these filters are quite simple and only do keyword matching; e.g. when a rude word is in the output, just show an exception message. Sometimes these filters are more complex and rely on validation by another AI; e.g. let another AI check whether the output is ethical. If the output is not ethical, respond with an exception message.
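To make the two filter styles concrete, here is a minimal Python sketch. It is not how Copilot is actually implemented; the word list, the exception message, and the names `keyword_filter`, `moderated_reply`, `generate`, and `validator` are all made up for illustration. The `validator` argument stands in for the "other AI" that checks the output.

```python
from typing import Callable, Iterable, Optional

# Hypothetical placeholder values, not Copilot's real word list or message.
BLOCKED_WORDS = {"hate", "stupid"}
EXCEPTION_MESSAGE = "Sorry, I can't chat about this."


def keyword_filter(text: str, blocked: Iterable[str] = BLOCKED_WORDS) -> bool:
    """Return True when the text contains none of the blocked words."""
    # Lowercase each word and strip surrounding punctuation before comparing.
    words = {word.strip(".,!?;:\"'").lower() for word in text.split()}
    return words.isdisjoint(blocked)


def moderated_reply(
    user_input: str,
    generate: Callable[[str], str],
    validator: Optional[Callable[[str], bool]] = None,
) -> str:
    """Run the input filter, the model, and then the output filters."""
    # Input filter: simple keyword matching on the user's message.
    if not keyword_filter(user_input):
        return EXCEPTION_MESSAGE

    # `generate` is an assumed stand-in for the call to the language model.
    output = generate(user_input)

    # Output filter 1: the same keyword matching, applied to the answer.
    if not keyword_filter(output):
        return EXCEPTION_MESSAGE

    # Output filter 2 (optional): a second model judges the answer.
    if validator is not None and not validator(output):
        return EXCEPTION_MESSAGE

    return output
```

With this sketch, a message containing a blocked word is rejected before the model is ever asked, which would be consistent with the behaviour I saw in the rude test, although I can't confirm that this is what Copilot does internally.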

Conclusion

After looking into some studies and trying things out myself, I’ve found that asking questions nicely can get you better answers. I asked Copilot the same question politely and in a regular way. The polite question got me a slightly more detailed answer that also mentioned temperature and weather patterns, while the regular question didn’t.

I also tried being rude to Copilot to see what would happen. It turns out that Microsoft has set up Copilot so that it declines to continue a negative or rude conversation. This shows they’re making sure Copilot talks in a way that’s always respectful. It seems like being kind and clear when we ask questions isn’t just good manners: it actually helps us get the information we need.